Google Home Hub

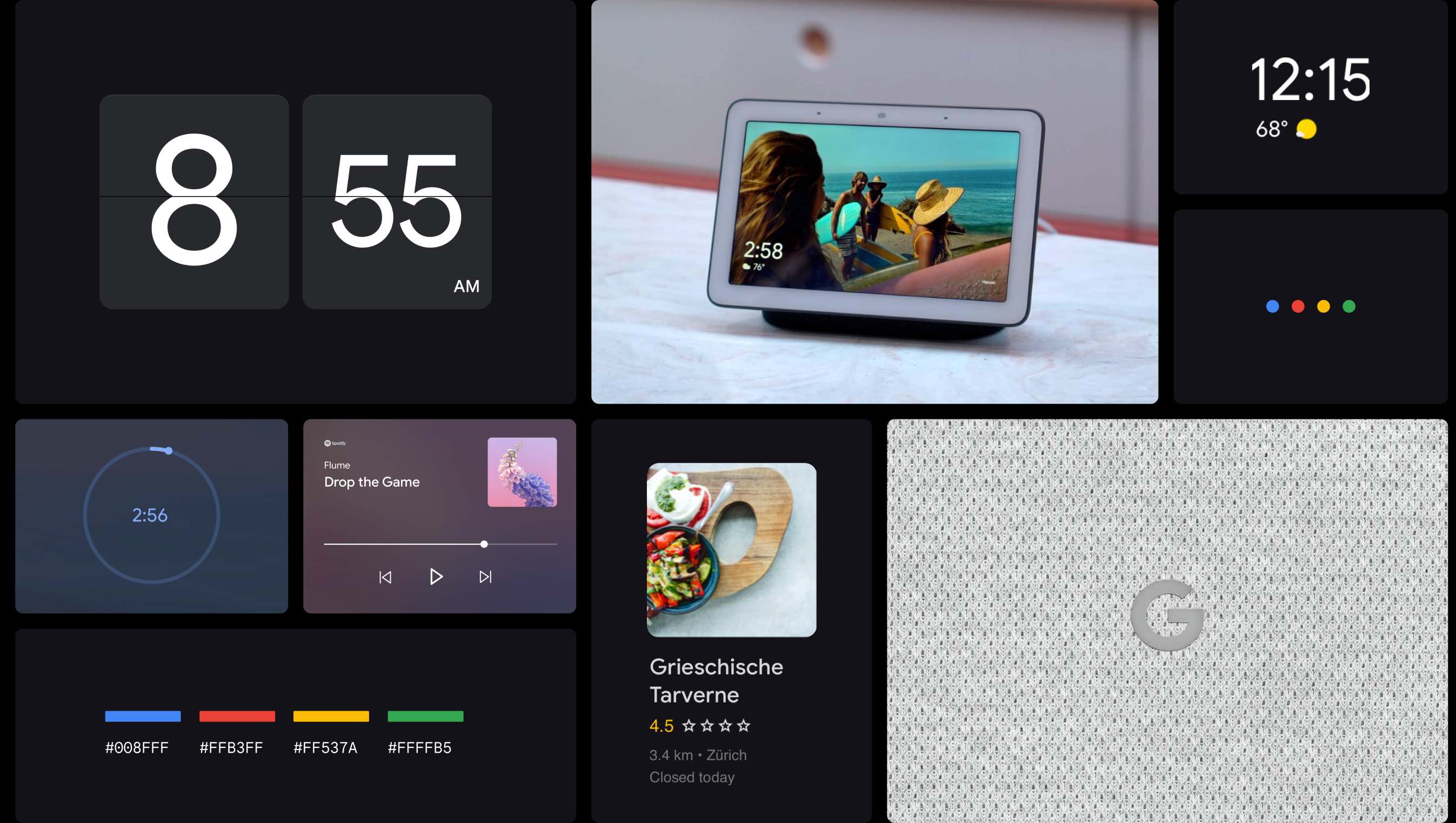

Co-initiated the Home Hub project with a small team in Zurich. Led the early design phase in 2017, defining the overall framework, spatial model, and core interaction principles.

Home Hub is a speaker and a photo frame with a glanceable voice Assistant built in. Rather than relying solely on pre-existing touch UI patterns, we set out to craft a fully voice-forward, multimodal device.

The Google Logo Family, with its motion-based system for voice, served as the foundation upon which we drew the system UI.

GM Blue 500

- RGB — 66 133 244

- CMYK — 88 40 0 0

- HEX — #4285F4

GM Red 500

- RGB — 234 67 53

- CMYK — 0 87 89 0

- HEX — #EA4335

GM Yellow 500

- RGB — 251 188 4

- CMYK — 0 37 100 0

- HEX — #FBBC05

GM Green 500

- RGB — 52 168 83

- CMYK — 85 0 92 0

- HEX — #34A853

Spatial model

We applied the principles of ambient computing to create a device that’s always available for quick action but becomes an extension of the home when idle.

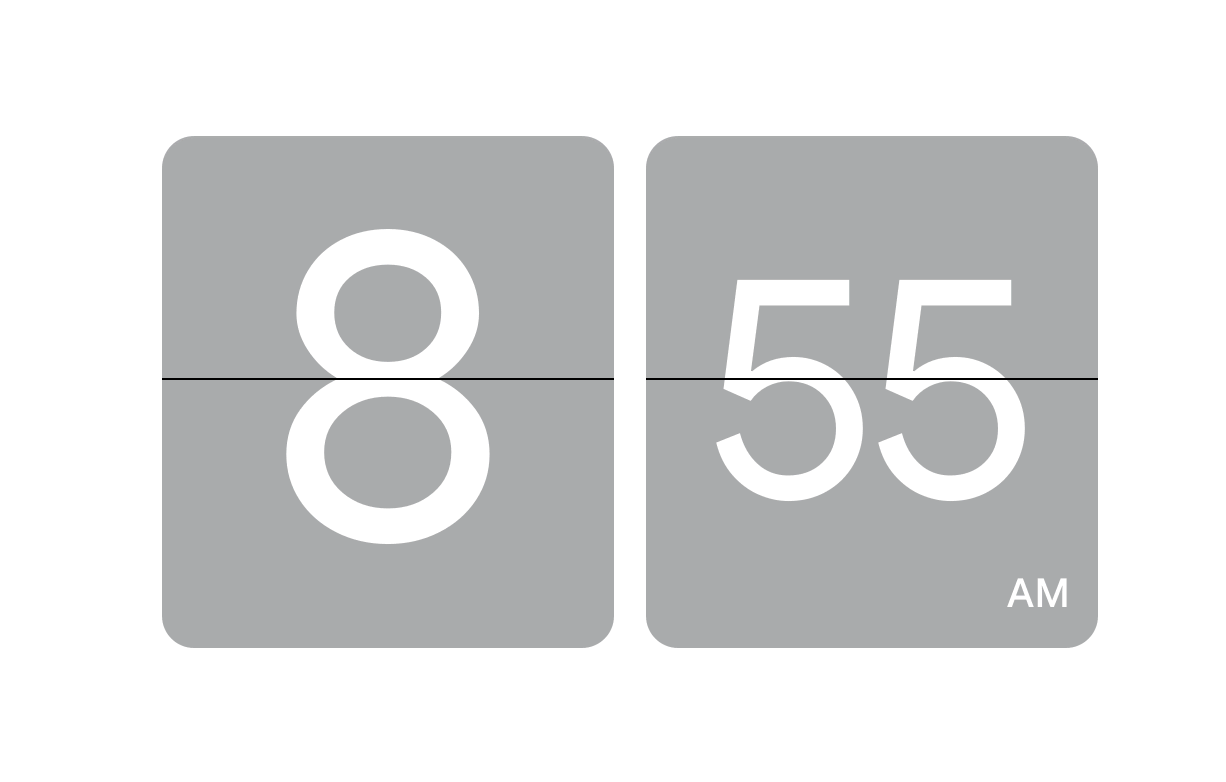

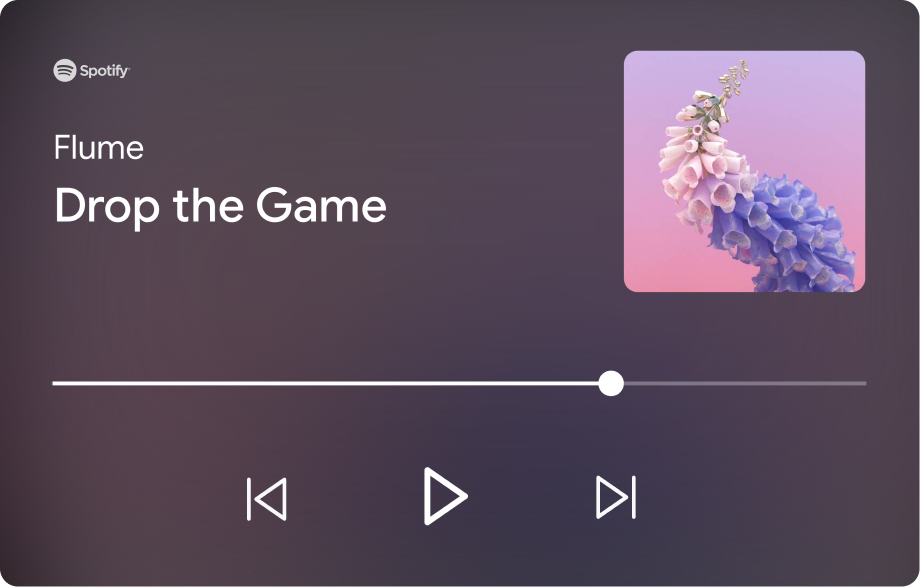

The spatial model was composed of foundational elements like time, photo albums, home controls, quick settings, and ongoing activities (e.g. timers, alarms, and media). Answers would appear in z-space.

Our approach was based on the foundational ideas of calm technology. To instill focus, clarity, and calmness, we introduced a guiding principle that became a core tenet of the project: the one-thing-at-a-time approach.

While the concept of one thing led to more focus, it also inevitably introduced many limitations.

After exploring a myriad of models, we went for a system where saying “Ok Google” took over the entire screen. This improved readability, focus, and visual feedback for far-field use, but it came at the cost of virtually eliminating all multitasking use cases.

To allow for continued interaction and mental white space, a floating input plate gave users the option to reopen the mic manually.

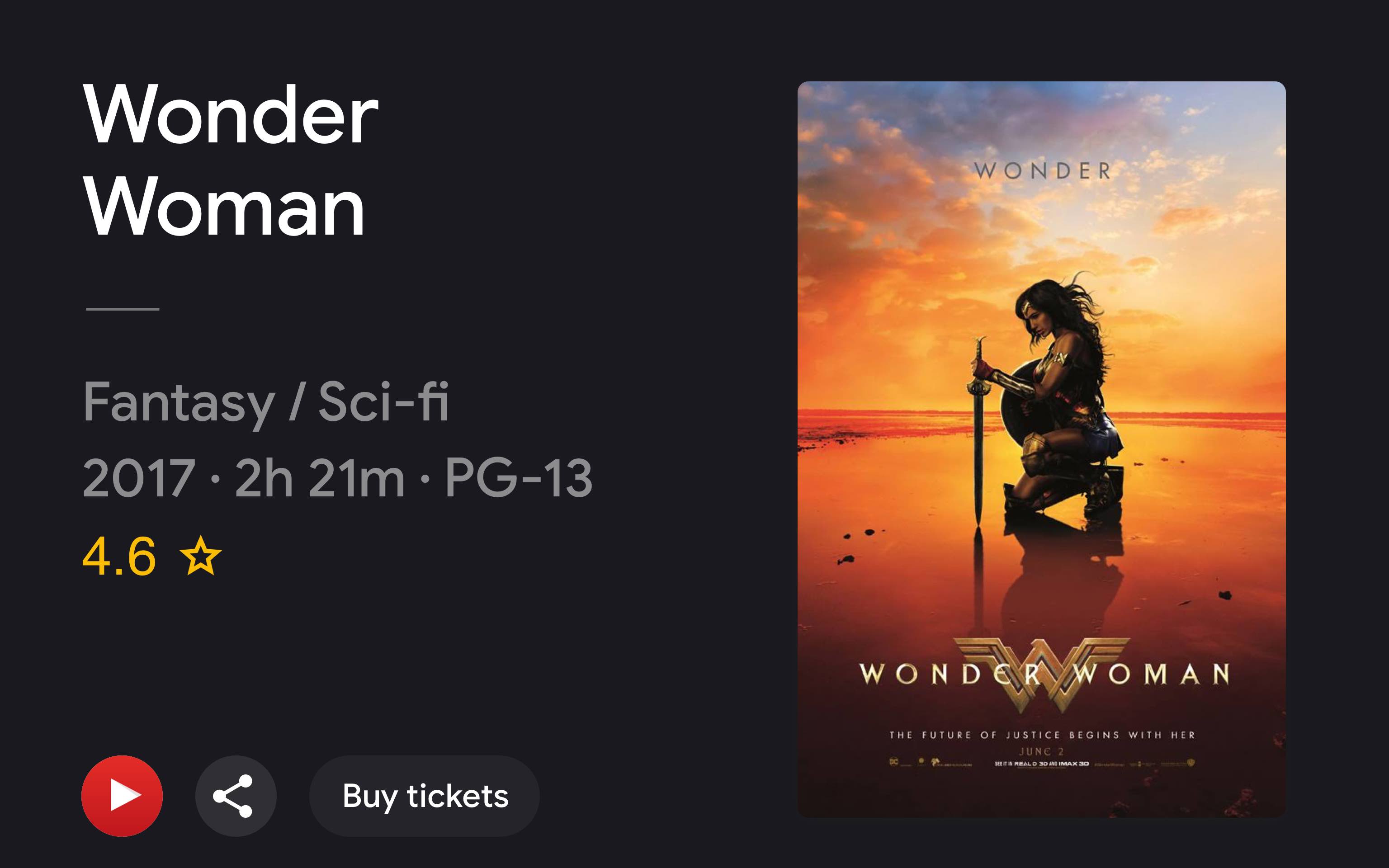

We crafted a template system to render a wide range of entity types like places, people, and media. This included designing conversational framework patterns like browse and refine, disambiguation, and pivots.

The nature of this project challenged us to think about how people interact with devices in an era of ubiquitous computing. It’s exciting to see how the team evolved the product and made it better in so many ways.

You can watch the launch video below.

Special thanks to Evan Malahy, Matt Stokes, Andrea van Scheltinga and everyone who contributed to this effort.